The European AI Act: a critical review of what you’ve heard in the news

28 June 2023

In recent weeks, you may have heard that Europe has adopted the first regulations on Artificial Intelligence (AI). We reviewed the regulations put forward by the European Parliament. In this blog post, we describe some of the general implications for businesses and add some much-needed nuance to the messages that have been circulating in the media.

First, it’s important to understand that the rules adopted by the European Parliament are not yet the final version of the Act. We therefore cannot say exactly what will be put in place, or when. However, we can get a view of the general vision and motivations of the European legislators. We also know that Europe wants these AI regulations finished sooner rather than later. Let’s look at the timeline of the AI Act, the rules it puts forward, and how they will affect the development of AI systems in Europe.

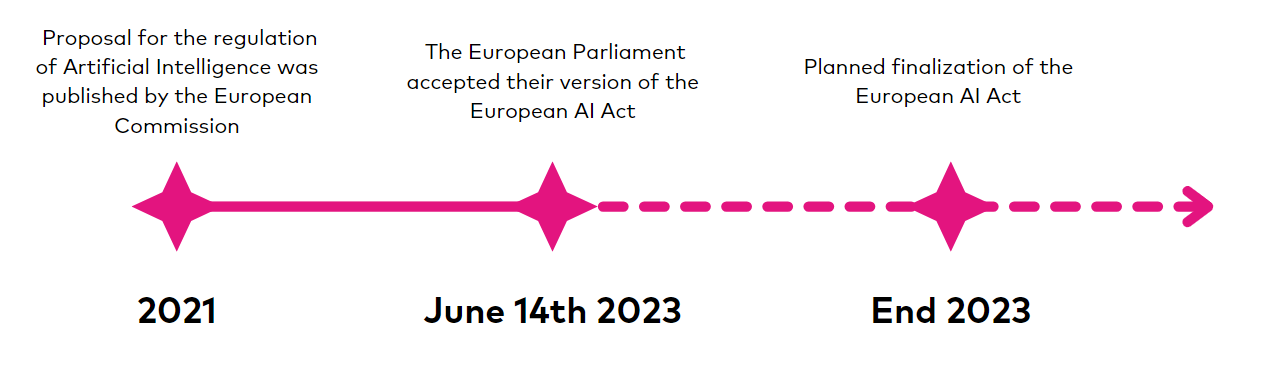

Timeline of the AI Act

The timeline of the European AI Act began in 2021, when the European Commission published a proposal for the regulation of Artificial Intelligence. In the same year, this proposal was followed by public consultations and studies to gather feedback. The swift progress in AI, exemplified by breakthroughs like ChatGPT, highlights the urgency of updated regulations. On June 14th 2023, the European Parliament adopted its version of the Act, but the final version still requires negotiations with the Council and the Commission. Ultimately, these three parties need to agree on the final version of the Act. Once it is finalized, the Act will prompt the development of implementation standards that guide businesses and organizations in complying with its requirements. While the exact date from which the Act will apply is uncertain, the European Parliament aims to finalize the AI Act by the end of 2023, paving the way for the development of enforceable standards from 2024 onwards.

Striking a balance: some things we can do, others we cannot

The AI Act establishes four categories of AI applications, ranging from unacceptable risk to minimal risk. The first group consists of AI applications that pose an unacceptably high risk and are therefore prohibited in the EU. These applications are the most intrusive and are mostly used by governments against their own citizens. Examples of such AI systems are the social scoring system in China and live biometric identification. Companies that fail to comply with this ban risk fines of up to €30 million or 6% of their income, whichever is higher.

Other applications that are likely to affect the course of individual lives are allowed but strictly regulated. This second group is called the “high-risk applications”. These models are characterized by a direct impact on the lives of natural persons: among other things, they have a say in employment opportunities, salaries, promotions, loan eligibility, and the exercise of freedoms and rights. The EU mandates meticulous development of high-risk models, encompassing comprehensive governance policies, robust data quality processes, and thorough risk management assessments. Failure to establish these processes may result in fines of up to €30 million or 6% of the company's income.

Another cornerstone of the AI Act is transparency around AI systems. Natural persons need to know when they are subject to, or interacting with, an AI model. This requirement receives particular attention in the context of generative AI, the third category. This category, often called “medium-risk” by the media, includes chatbots and deepfakes. A company that fails to provide this level of transparency may be liable for fines of up to €20 million or 4% of its income.

The fourth category, less intrusive models, is not explicitly covered by the regulation and therefore faces no additional regulatory measures from this Act. However, in all cases, including this fourth category, providing incorrect, incomplete, or misleading information about AI systems to the relevant authorities can result in fines of up to €10 million or 2% of the company's income, whichever is higher.
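Each penalty tier described above follows the same pattern: a fixed amount or a share of the company's income. As a minimal sketch, the Python snippet below computes the ceiling of each tier under two assumptions: that the “whichever is higher” rule mentioned for the first and last tiers applies to every tier, and that the amounts are those from the Parliament's June 2023 text as summarized in this post, which may still change during negotiations. The names `FineTier`, `TIERS` and `maximum_fine` are hypothetical and used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    """Hypothetical model of one penalty tier: a fixed cap and a percentage of income."""
    description: str
    fixed_cap_eur: int
    income_percent: int


# Amounts as summarized in this post (Parliament text, June 2023); subject to change.
TIERS = {
    "prohibited": FineTier("Unacceptable-risk (prohibited) practice", 30_000_000, 6),
    "high_risk": FineTier("High-risk requirements not met", 30_000_000, 6),
    "transparency": FineTier("Transparency obligations not met", 20_000_000, 4),
    "misinformation": FineTier("Incorrect or misleading information to authorities", 10_000_000, 2),
}


def maximum_fine(tier: FineTier, annual_income_eur: int) -> int:
    """Return the fine ceiling: the higher of the fixed cap and the income share.

    Assumes the 'whichever is higher' rule applies to every tier.
    Integer arithmetic avoids floating-point rounding on large amounts.
    """
    income_share = annual_income_eur * tier.income_percent // 100
    return max(tier.fixed_cap_eur, income_share)


if __name__ == "__main__":
    income = 1_000_000_000  # hypothetical company with EUR 1 billion annual income
    for key, tier in TIERS.items():
        print(f"{tier.description}: up to EUR {maximum_fine(tier, income):,}")
```

For example, a company with €1 billion in annual income would face a ceiling of €60 million for a prohibited practice, whereas for a company with €100 million in income the fixed €30 million amount is the higher of the two.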

Will this legislation stop innovation?

Regulations are often seen as a burden on innovation. However, we believe that the regulations surrounding AI should not reduce companies' appetite to explore useful applications of AI. A primary reason is that a significant portion of AI applications falls outside the scope of the AI Act and is therefore not subject to extra regulatory pressure.

For models in scope, the regulatory requirements could be seen as an additional burden. However, we argue that this view is not entirely warranted. High-risk applications can have a serious impact on clients and businesses, both positive and negative. From a business perspective, it is therefore desirable to establish comprehensive governance policies, robust data quality processes, and thorough risk management assessments to ensure that AI models align with business goals, irrespective of regulatory mandates. Nevertheless, the need to register and certify these types of models could become a bottleneck for putting them into production, even though the Act requires the certifying bodies to have adequate financial and human resources.

To further prevent AI innovation from stagnating, the AI Act also outlines concrete policies to support the development of AI systems. Each national authority is mandated to establish an AI development environment (a so-called regulatory sandbox) that companies can use to train and test their AI systems.

Conclusion

While the final details of the AI Act remain uncertain, Europe's message is clear: prioritize accurate, fit-for-purpose models that are well governed. The ultimate goal is to develop applications that benefit your business, your clients, and society as a whole. To achieve this, it is crucial to understand AI, governance, data quality, and how to effectively integrate a model's predictions into your company processes, regardless of legal requirements. Need help with these tasks, or with aligning them with your business processes? Do not hesitate to contact Datashift; we're always ready to listen!