What an event-driven architecture brings to the table to solve your data ingestion challenges

5 July 2022

Every day you work hard to deliver value to your customers. The processes that enable you to do so generate ever-increasing amounts of data. When you start looking for an analytical tool to get more value out of that data, a quick look at today’s landscape shows you're spoiled for choice.

But wait, let’s not get ahead of ourselves. Before generating any insights from your data, you need to make sure it’s available for analysis. And you don’t want the activity of your data science team to degrade the performance of the applications your customers and employees rely on. So, first things first: you need to move your data from an operational to an analytical environment, a process commonly referred to as data ingestion. The success of a data ingestion process hinges on its timeliness, performance, and cost-effectiveness.

What's so challenging about data ingestion?

When designing an ingestion process, you need to decide how frequently data must be ingested to produce timely insights. What counts as timely depends on the use case. For example, in parts of your HR administration, changes made during the day may not be relevant for your analytical purposes; all that matters is the state of the system at the end of the day. A common approach in this case is to ingest the data once every 24 hours, usually at night. When users need intraday analytical feedback on their actions, you run the ingestion multiple times per day. Increasing the ingestion frequency in this way brings us to a trade-off between timeliness and performance.

Ingesting data in large batches creates peaks in load on databases and networks. This can degrade the performance of applications that rely on these resources, which is another reason why we commonly ingest data when operational usage is low, e.g., at night. But sometimes there are no low-usage periods, for example in a business that operates across multiple time zones. So if we do want to ingest data more frequently, what else can we do?

Loading less data in every batch is a common way to optimize performance. We do this by ingesting only what has changed since the last time the data were ingested. A common case in which we can leverage this optimization is a database table with a field that records when each row was last changed. However, such a mechanism may not be available: there may be no such field, or no performant way to query it. If we don't own the database or can't change it for any other reason, we're stuck with ingesting all the data in every batch. In that case, most of the cost of running data ingestion goes to copying data that are already available in the analytical environment. Given that today's applications produce more data than ever before, it's not hard to see why this is not a great idea. When we have to frequently ingest large batches of mostly unchanged data, we get low marks for cost-effectiveness, performance, and timeliness. Surely we can do better.
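When a last-changed field does exist, the incremental load is often implemented with a "watermark": remember the newest timestamp seen in the previous run and fetch only rows changed after it. The sketch below illustrates that idea under stated assumptions; the `employees` table, its `last_modified` column (an ISO-8601 string, so text order matches time order), and the `fetch_changes_since` helper are all hypothetical, with an in-memory SQLite database standing in for the operational source.

```python
import sqlite3


def fetch_changes_since(conn: sqlite3.Connection, watermark: str) -> tuple[list[tuple], str]:
    """Return rows changed after `watermark`, plus the watermark for the next run.

    Assumes a hypothetical table employees(id, name, last_modified), where
    last_modified is an ISO-8601 string, so text comparison follows time order.
    """
    rows = conn.execute(
        "SELECT id, name, last_modified FROM employees "
        "WHERE last_modified > ? ORDER BY last_modified",
        (watermark,),
    ).fetchall()
    # Advance to the newest change seen; keep the old watermark if nothing changed.
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


# Demo: an in-memory database stands in for the operational source.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, last_modified TEXT)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [(1, "Ada", "2022-07-04T09:00:00"), (2, "Grace", "2022-07-05T10:30:00")],
)

# Only Grace's row changed after the previous run's watermark.
batch, watermark = fetch_changes_since(conn, "2022-07-04T23:59:59")
print(batch, watermark)
```

Without a column like `last_modified`, the `WHERE` clause has nothing to filter on and every run degenerates into a full copy. Even with it, a strict `>` comparison can miss rows whose transaction commits after the watermark has already moved past their timestamp, which is one reason this approach needs care.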

What do you really want to achieve?

Let’s take a step back. In the end, all you want to achieve is to move data from an operational to an analytical environment. What you’d really like to do, if possible, is to replicate changes as they happen in your operational environment. Fortunately, there is an elegant solution to achieve just that. It’s called event-driven data ingestion.

How event-driven data ingestion works

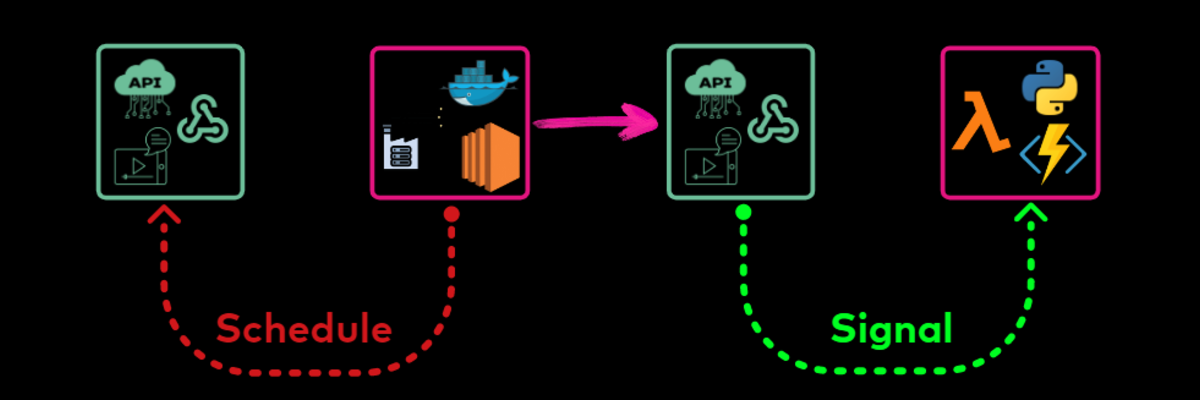

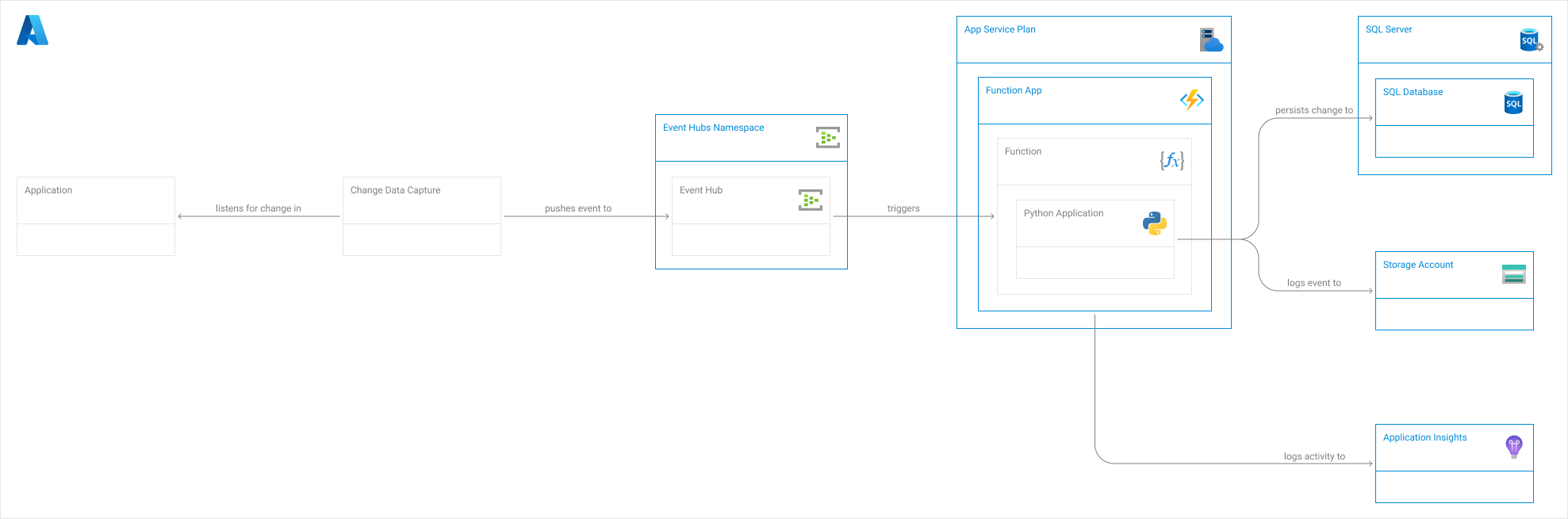

Event-driven data ingestion is enabled by an event-driven architecture consisting of event producers, event buses, and event consumers.

To start with, any application that contains potentially valuable data needs to become an event producer. There are several ways to achieve that, and the right approach depends mainly on how the application produces and stores its data. One common method is change data capture (CDC): a process that captures changes in the database behind the application and sends out an event for every insert, update, or delete as it happens.
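One way to build such a change-capturing process is trigger-based change data capture: database triggers record every insert, update, and delete in a change-log table, and a small producer reads new entries from that table and turns them into events. The sketch below shows this variant using SQLite; the `employees` and `change_log` tables and the `poll_events` helper are hypothetical, and the events are JSON strings that a real producer would publish to an event bus instead of printing.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
# Trigger-based CDC: every insert, update, or delete on the (hypothetical)
# employees table also writes a row to change_log, in the order it happened.
conn.executescript("""
CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE change_log (
    seq INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT NOT NULL,
    row_id INTEGER NOT NULL,
    name TEXT
);
CREATE TRIGGER employees_ins AFTER INSERT ON employees BEGIN
    INSERT INTO change_log (op, row_id, name) VALUES ('insert', NEW.id, NEW.name);
END;
CREATE TRIGGER employees_upd AFTER UPDATE ON employees BEGIN
    INSERT INTO change_log (op, row_id, name) VALUES ('update', NEW.id, NEW.name);
END;
CREATE TRIGGER employees_del AFTER DELETE ON employees BEGIN
    INSERT INTO change_log (op, row_id, name) VALUES ('delete', OLD.id, NULL);
END;
""")


def poll_events(conn: sqlite3.Connection, last_seq: int) -> tuple[list[str], int]:
    """Read change_log entries after last_seq and encode each as a JSON event."""
    rows = conn.execute(
        "SELECT seq, op, row_id, name FROM change_log WHERE seq > ? ORDER BY seq",
        (last_seq,),
    ).fetchall()
    events = [
        json.dumps({"seq": seq, "op": op, "id": row_id, "name": name})
        for seq, op, row_id, name in rows
    ]
    return events, (rows[-1][0] if rows else last_seq)


# Normal application activity; the triggers capture each change.
conn.execute("INSERT INTO employees (id, name) VALUES (1, 'Ada')")
conn.execute("UPDATE employees SET name = 'Ada L.' WHERE id = 1")
conn.execute("DELETE FROM employees WHERE id = 1")

events, last_seq = poll_events(conn, 0)
for event in events:
    print(event)  # a real producer would publish this to the event bus
```

Triggers require changing the source database and add work to every write. Log-based CDC, which reads the database's own transaction log, avoids both and is usually the better fit when you don't own the database.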

An event bus then mediates the flow between event producers and event consumers. Suppose, for example, that an event consumer had a temporary outage. Without an event bus, any events sent to the consumer during this outage would never be processed. An event bus avoids such data loss by retaining events until the event consumers are ready to process them.
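The buffering behaviour can be illustrated with a toy, in-memory stand-in for an event bus: an append-only log plus a committed read offset per consumer. This is a minimal sketch, not how any particular product is implemented; `InMemoryEventLog` and the `warehouse-loader` consumer name are hypothetical. Production event buses such as Apache Kafka persist and replicate the log and track consumer offsets durably.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryEventLog:
    """Toy event bus: an append-only log plus a committed read offset per consumer."""

    events: list = field(default_factory=list)
    offsets: dict = field(default_factory=dict)

    def publish(self, event) -> None:
        # Producers only append; nothing depends on consumers being online.
        self.events.append(event)

    def poll(self, consumer: str, max_events: int = 100) -> list:
        # Read from the consumer's last committed offset; nothing is removed.
        start = self.offsets.get(consumer, 0)
        return self.events[start:start + max_events]

    def commit(self, consumer: str, count: int) -> None:
        # Called only after processing succeeds, so a crash before commit
        # means the same events are delivered again (at-least-once delivery).
        self.offsets[consumer] = self.offsets.get(consumer, 0) + count


bus = InMemoryEventLog()

# The consumer is down: producers keep publishing and the events are retained.
for name in ["Ada", "Grace", "Edsger"]:
    bus.publish({"op": "insert", "name": name})

first = bus.poll("warehouse-loader")
# Simulated crash before commit: the next poll returns the same events.
retry = bus.poll("warehouse-loader")
bus.commit("warehouse-loader", len(retry))
after = bus.poll("warehouse-loader")  # nothing left to process
```

Committing only after processing trades possible duplicates for no lost events, which is why consumers are typically written so that re-applying an event is harmless.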

What you want event consumers to do with those events depends on your use case. For example, you can have the events written to a data lake and use that as a cost-effective long-term event log. Or you can use the events to continually update the state of your analytical database and feed your business dashboards from there. Or maybe you want streaming analytics to get insights you can act on in near real-time.
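As a sketch of the first two options, the consumer below appends every event to an append-only history (a plain list standing in for a data lake) and applies it to a dictionary standing in for a table in the analytical database. The event shape (`op`, `id`, `name`) and the `apply_event` and `consume` helpers are hypothetical, chosen only for illustration.

```python
import json


def apply_event(state: dict, event: dict) -> None:
    """Upsert or delete one row of a (hypothetical) analytical table keyed by id."""
    if event["op"] in ("insert", "update"):
        state[event["id"]] = {"name": event["name"]}
    elif event["op"] == "delete":
        state.pop(event["id"], None)  # ignore deletes for rows we never saw
    else:
        raise ValueError(f"unknown op: {event['op']!r}")


def consume(events: list, state: dict, lake: list) -> None:
    for event in events:
        lake.append(json.dumps(event))  # long-term event log (data-lake stand-in)
        apply_event(state, event)       # current state that dashboards can query


events = [
    {"op": "insert", "id": 1, "name": "Ada"},
    {"op": "insert", "id": 2, "name": "Grace"},
    {"op": "update", "id": 1, "name": "Ada L."},
    {"op": "delete", "id": 2, "name": None},
]
state, lake = {}, []
consume(events, state, lake)
print(state)  # {1: {'name': 'Ada L.'}}
```

Because inserts and updates are treated as upserts and deletes tolerate missing rows, re-applying a redelivered batch in order leaves the analytical state unchanged; the history in the lake would contain duplicates, though, unless events carry an identifier to deduplicate on.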

The impact of an event-driven data ingestion process

With this event-driven architecture, we have gone from ingesting data at intervals as long as 24 hours to ingesting events the moment they happen. That is quite a leap in timeliness. A second great benefit is that data ingestion now puts a much more predictable and consistent load on our systems, which minimizes the risk of inadvertently impacting source application performance. Lastly, in today's cloud environments, an event-driven setup can scale throughput, and with it cost, from moment to moment. The result is a highly cost-effective solution that is always just as performant as it needs to be.

Need a hand?

Do you recognize some of the challenges we discussed and want to understand how your business might tackle them? Just reach out, and we'll be happy to help.